This session I focused on working out custom templates. I also recognized that a major problem of mine is overlooking the simple solutions, like using the help documentation for Blade.

The session had some successes and produced its own set of problems that need to be looked into before going back into the studio.

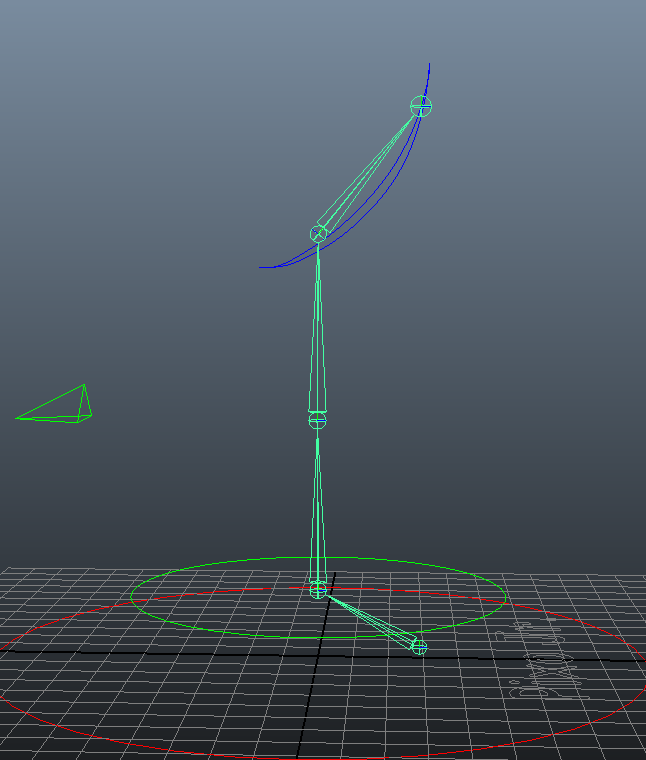

The marker setup we ended up landing on for this session was as follows:

- Head: the three head markers drive the rotation, and their placement tracks the direction the face is pointing.
- Top half of the lamp: its translation comes from the distance between the markers around the shoulders and those on the hips.
- Hips: the main part of the acting comes from the hip movements.
- Bottom half of the lamp: its translation comes from the relationship between the hip and knee markers.
- Base: its rotation comes from the ankle and foot markers.

The markers were placed along the central part of the body, particularly on the toes, since the legs would be held together in some way.
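
As a rough illustration of how three head markers can define a rotation, the sketch below builds an orthonormal frame from three points in plain Python. The layout (one marker at the back of the head, one on each side towards the face) and the names `back`, `left` and `right` are hypothetical, and this is not meant to represent what Blade does internally; it only shows why three non-collinear markers are enough to pin down which way the face points.

```python
import math
from typing import List, Tuple

Vec = Tuple[float, float, float]


def sub(a: Vec, b: Vec) -> Vec:
    """Vector from b to a."""
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def midpoint(a: Vec, b: Vec) -> Vec:
    return ((a[0] + b[0]) / 2, (a[1] + b[1]) / 2, (a[2] + b[2]) / 2)


def cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(v: Vec) -> Vec:
    length = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    if length < 1e-12:
        # Happens when markers coincide or all three lie on one line.
        raise ValueError("head markers are coincident or collinear")
    return (v[0] / length, v[1] / length, v[2] / length)


def head_frame(back: Vec, left: Vec, right: Vec) -> List[Vec]:
    """Build an orthonormal head frame from three (hypothetically placed) markers.

    Returns [forward, side, up] as unit vectors; used as matrix columns
    they form a right-handed rotation matrix for the head.
    """
    forward = normalize(sub(midpoint(left, right), back))  # back of head -> face
    up = normalize(cross(forward, sub(left, right)))       # perpendicular to forward and the left/right axis
    side = cross(up, forward)                               # re-orthogonalised sideways axis (already unit length)
    return [forward, side, up]
```

Evaluating this per frame gives a head orientation per frame; the marker layout only needs to keep the three points well apart so the cross product never collapses.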

This marker setup had problems with occlusion, and with the chin and chest markers swapping with each other. There are two possible fixes:

Either replace the central chest marker with one marker on each shoulder.

Or, as Norbert suggested, use a multiply node in Maya to take the movement from the knee area and reverse it so it bends the right way. This would eliminate the need for the shoulder/chest markers altogether.

This is a particularly interesting suggestion as it may yield more realistic results in terms of range of movement.
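
A minimal sketch of what the multiply node does in Norbert's suggestion, written in plain Python rather than inside Maya: each output channel is the product of the matching input channels, so multiplying the knee's bend channel by -1 mirrors the bend. It assumes the bend happens around the X axis, and `REVERSE_BEND` and `upper_joint_rotation` are hypothetical names. In Maya itself this would roughly correspond to a multiplyDivide node with the knee's rotation connected to `input1`, `input2X` set to -1, and `output` connected to the upper joint's rotation.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def multiply_node(input1: Vec3, input2: Vec3) -> Vec3:
    """Per-channel product, mirroring a multiply node in 'multiply' mode."""
    return (input1[0] * input2[0],
            input1[1] * input2[1],
            input1[2] * input2[2])


# Hypothetical factor: flip the bend axis (assumed to be X), pass Y and Z through.
REVERSE_BEND: Vec3 = (-1.0, 1.0, 1.0)


def upper_joint_rotation(knee_rotation: Vec3) -> Vec3:
    """Reverse the knee bend so the upper joint bends the opposite way."""
    return multiply_node(knee_rotation, REVERSE_BEND)


if __name__ == "__main__":
    # A 30-degree knee bend becomes a -30-degree bend on the upper joint.
    print(upper_joint_rotation((30.0, 5.0, 0.0)))  # (-30.0, 5.0, 0.0)
```

Scaling by something other than -1 (for example -1.5) would exaggerate the upper bend, which may be one way to push the range of movement further.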

Actually creating the custom skeleton in Blade proved to be quite a task. While Blade does let you build your own structure, we couldn't find a way to rename the markers or apply a custom set of bones to them, so the session didn't produce any actual capture beyond the initial ROM (range of motion).

Before the next session I want to look into applying custom bones (probably looking to dynamic props for a solution) and also test applying only the rotation of the source to a target rig.
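
To pin down what "only the rotation" means in that test, here is a hedged sketch in plain Python. Poses are stored as hypothetical per-joint dictionaries of `translate` and `rotate` values; the target rig keeps its own translations (and therefore its own proportions) and only rotations are copied from the source. The optional `name_map`, pairing target joints with source joints, is also an assumption standing in for the renaming we couldn't find in Blade. It copies raw rotation values, so it only makes sense if source and target joints share the same rest orientation.

```python
from typing import Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]
# Hypothetical pose layout: joint name -> {"translate": (x, y, z), "rotate": (rx, ry, rz)}
Pose = Dict[str, Dict[str, Vec3]]


def transfer_rotation_only(source: Pose, target: Pose,
                           name_map: Optional[Dict[str, str]] = None) -> Pose:
    """Copy rotations from source onto target, keeping the target's translations.

    name_map pairs target joint names with source joint names; joints without
    an entry are looked up by their own name. Target joints with no matching
    source joint keep their existing rotation.
    """
    name_map = name_map or {}
    result: Pose = {}
    for joint, channels in target.items():
        new_channels = dict(channels)  # copy so the original target pose is left untouched
        src_joint = name_map.get(joint, joint)
        if src_joint in source:
            new_channels["rotate"] = source[src_joint]["rotate"]
        result[joint] = new_channels
    return result


if __name__ == "__main__":
    performer = {"LeftKnee": {"translate": (10.0, 50.0, 0.0), "rotate": (45.0, 0.0, 0.0)}}
    lamp = {"lamp_lower": {"translate": (0.0, 20.0, 0.0), "rotate": (0.0, 0.0, 0.0)}}
    print(transfer_rotation_only(performer, lamp, {"lamp_lower": "LeftKnee"}))
```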
