As I've chosen to retarget to the lamp rig, there are a few things I need to do:

Look at the rig and how it's constructed.

Figure out how movement from the source data can be translated across to the rig (marker placement).

Do some motion analysis on how the lamp should move, to help judge how successful the final result is.

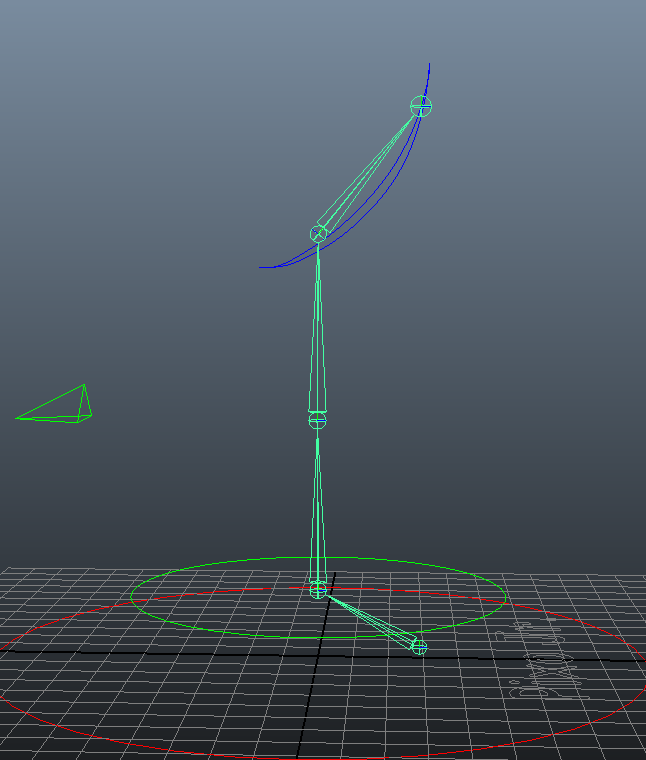

The Rig

So the rig for the lamp is pretty simple in the grand scheme of things, which is handy. The structure should also be useful when figuring out where the markers should be placed to get the right sort of movement from the actor during capture.

Marker placement on people

A first pass of analysis led pretty easily to this marker setup. It takes into account both the joints in the rig and the joints on the actor.

But when watching this, one of the poses jumped out as being an awkward one to manage:

The pose seen in the images below demonstrates a couple of issues with the current setup. The first is something to consider during the capture process: props may be needed to help the actor balance when the lamp is overextended. The second is the positioning of the head. The proposed solution in the image below might not actually help at all. At present there are five joints driving two in order to control the head, and this might cause some issues for head mobility.

I'd like to try some posing tests where I take images of the lamp in various poses and then get people to replicate them. This might highlight further issues that call for changes to the marker system or more props during capture. I'll be paying particularly close attention to the head movement.

Motion analysis: lamp movement

I've done a bit of motion analysis for lamp movement, based mainly on Luxo Jr. in the Pixar short. I also looked for movement with a similar structure, and it led me to believe that the best movement reference for an Anglepoise lamp would probably be a three-legged cat, with a front leg being the one that's missing. This is due to the bone structure of the front leg, neck and head, the agility a cat possesses, and the weight that has to be placed on the single limb, which adds emphasis (although a regular cat was working out fine).

While doing this motion research I started to consider how to measure success. I came to the conclusion that the best test would probably be to hand animate a scene with the lamp rig I have, and then try to get the motion capture to achieve something similar. So that's a task I need to do pretty promptly.
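One rough way to put a number on that comparison, once both versions exist, is to compare joint angle curves frame by frame. This is only a sketch under my own assumptions: the joint names are made up, the curves are plain lists of angles in degrees sampled on the same frames, and angle wrap-around is ignored. A low score would only say the motion is close, not that the performance reads well.

```python
import math


def rms_angle_error(hand_keyed, captured):
    """Root-mean-square difference, in degrees, between two angle curves."""
    if len(hand_keyed) != len(captured):
        raise ValueError("curves must have the same number of frames")
    if not hand_keyed:
        raise ValueError("curves must not be empty")
    squared = [(h - c) ** 2 for h, c in zip(hand_keyed, captured)]
    return math.sqrt(sum(squared) / len(squared))


def compare_takes(hand_anim, mocap):
    """Return the RMS error per joint, for joints present in both takes.

    Both arguments map a (hypothetical) joint name to a list of angles.
    """
    return {
        joint: rms_angle_error(hand_anim[joint], mocap[joint])
        for joint in hand_anim
        if joint in mocap
    }


if __name__ == "__main__":
    hand_anim = {"neck": [0.0, 10.0, 20.0], "head": [5.0, 5.0, 5.0]}
    mocap = {"neck": [1.0, 12.0, 19.0], "head": [5.0, 6.0, 4.0]}
    for joint, error in compare_takes(hand_anim, mocap).items():
        print(f"{joint}: {error:.2f} degrees RMS")
```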

Later today

On the technical side, I'm looking at creating a custom VST (Vicon skeleton template). I think it might involve manually labeling the markers, but I'm wondering whether you can use the human skeleton and then just not record some parts. Would it complain?

Using the existing human template but labeling the markers wrong (to give the illusion of the lamp's structure) might give interesting results in the viewport, and with selective retargeting later on in the pipeline this could prove to be a beneficial technique. Furthermore, if you could turn off certain limbs, as you can with some rigs in Maya to get better visibility of the current focus, this might limit the distraction from the parts of the existing template that aren't labeled in the traditional manner.

Things I want to try (in a more concise manner)

- Selective markers applied to existing human template (traditional labeling, i.e. the hip is the hip)

- Attempt to make a custom template (although I've found no help online in regards to doing this)

- Complete skeleton, using the existing template but labeling it in a way that forms the structure of the lamp within the viewport (likely to be messy)
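To make the selective idea a bit more concrete, here is a small sketch of collapsing a frame of human marker positions onto lamp joints. Everything in it is an assumption on my part: the marker labels are in the style of common Vicon marker sets, the lamp joint names are invented, and this is plain Python working on exported positions rather than anything Vicon provides. Markers that aren't in the mapping (the arms, for example) are simply ignored.

```python
# Hypothetical mapping from human marker labels to lamp joint names.
MARKER_TO_LAMP = {
    "LANK": "base", "RANK": "base",
    "LKNE": "lower_hinge", "RKNE": "lower_hinge",
    "LASI": "upper_hinge", "RASI": "upper_hinge",
    "C7": "neck",
    "LFHD": "head", "RFHD": "head",
}


def lamp_frame(markers, mapping=MARKER_TO_LAMP):
    """Collapse one frame of marker positions onto lamp joints.

    `markers` maps a marker label to an (x, y, z) tuple. Markers that
    share a lamp joint are averaged; unmapped markers are dropped.
    """
    grouped = {}
    for label, position in markers.items():
        joint = mapping.get(label)
        if joint is None:
            continue  # not part of the lamp, e.g. arm markers
        grouped.setdefault(joint, []).append(position)
    return {
        joint: tuple(sum(axis) / len(points) for axis in zip(*points))
        for joint, points in grouped.items()
    }


if __name__ == "__main__":
    frame = {
        "LANK": (0.0, 0.0, 0.0),
        "RANK": (2.0, 0.0, 0.0),
        "LWRA": (9.0, 9.0, 9.0),  # arm marker, ignored
    }
    print(lamp_frame(frame))
```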

Extra thoughts:

Adding more subtle performance. Performance is still a major factor in what this project is trying to achieve. A lamp that moves in a technically correct way is the base I'm hoping to build on with this process, which is why certain aspects are being considered.

For movements in the neck it might be nice to use the extra joint to influence the angle between 2 and 3, maybe giving the option of blending between the two angles during the cleanup process? This could help to plus the performance at key moments.
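The blending idea could be as simple as a weighted mix of the two angles, with the weight keyed per frame during cleanup. This is a minimal sketch, assuming both angles are in degrees and close enough together that wrap-around isn't a concern; the function names are my own.

```python
def blend_angle(captured_deg, alternate_deg, weight):
    """Linearly blend two angles. weight 0 keeps the captured angle,
    weight 1 uses the alternate one. The weight is clamped to 0..1."""
    weight = max(0.0, min(1.0, weight))
    return (1.0 - weight) * captured_deg + weight * alternate_deg


def blend_curve(captured, alternate, weights):
    """Blend two angle curves frame by frame using a per-frame weight."""
    return [
        blend_angle(c, a, w)
        for c, a, w in zip(captured, alternate, weights)
    ]


if __name__ == "__main__":
    captured = [10.0, 12.0, 15.0]
    alternate = [30.0, 32.0, 35.0]
    weights = [0.0, 0.5, 1.0]  # ramp the extra joint in over three frames
    print(blend_curve(captured, alternate, weights))
```

Keying the weight rather than the angle itself would keep the captured motion intact and only push it at the moments that need it.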

Arms need to be kept from helping to add movement. Knees need to be kept together, but I'm not sure how the distance between the hips and the ankles can be kept consistent. I might need to reconsider where the markers are on the lower section of the body.
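Before moving any markers it might be worth measuring how much that hip to ankle distance actually changes over a take. A rough sketch, assuming the two marker positions have been exported as lists of (x, y, z) tuples, one per frame (the function name is hypothetical, and `math.dist` needs Python 3.8 or later):

```python
import math


def distance_spread(hip_positions, ankle_positions):
    """Return (min, max, range) of the hip to ankle distance over a take."""
    distances = [
        math.dist(hip, ankle)
        for hip, ankle in zip(hip_positions, ankle_positions)
    ]
    if not distances:
        raise ValueError("no frames to measure")
    shortest, longest = min(distances), max(distances)
    return shortest, longest, longest - shortest


if __name__ == "__main__":
    hips = [(0.0, 0.0, 3.0), (0.0, 0.0, 4.0)]
    ankles = [(0.0, 0.0, 0.0), (0.0, 0.0, 0.0)]
    print(distance_spread(hips, ankles))
```

A large range would suggest the lower markers need rethinking, while a small one might be something cleanup can absorb.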
